Software Engineering Team Topologies for 2026

A scientific framework for engineering teams in 2026 based on sequential probability networks, AI placement, and neuro-psychometric evaluation.

The Sequential Probability Network

The organizational chart is a lie. It is a relic of the industrial age. It attempts to map a stochastic, non-linear network of human cognition onto a static 19th-century factory hierarchy. For the Chief Technology Officer operating in the AI-augmented era, relying on this artifact is not merely inefficient. It is an act of negligence. The future of Software Engineering Team Topologies for 2026 is not defined by who reports to whom. It is defined by the physics of information flow, the mathematics of sequential probability, and the rigorous evaluation of human cognitive fidelity.

We are exiting the era of intuition. We are entering the era of probability. Teams do not operate as isolated job titles. They work as linked stages in a dependency chain where each effort choice changes what the next person believes is possible. Once Artificial Intelligence enters this chain, the topology must shift. We must move from the "Factory Model" to the "Sequential Probability Network."

This doctrine outlines the scientific reality of building high-performance engineering organizations. It rejects the "Warm Body" compromise. It rejects the "Resume Fallacy." It establishes a rigorous framework based on the O-Ring Invariant, Sequential Effort Incentives, and the Axiom Cortex™ neuro-psychometric evaluation engine.

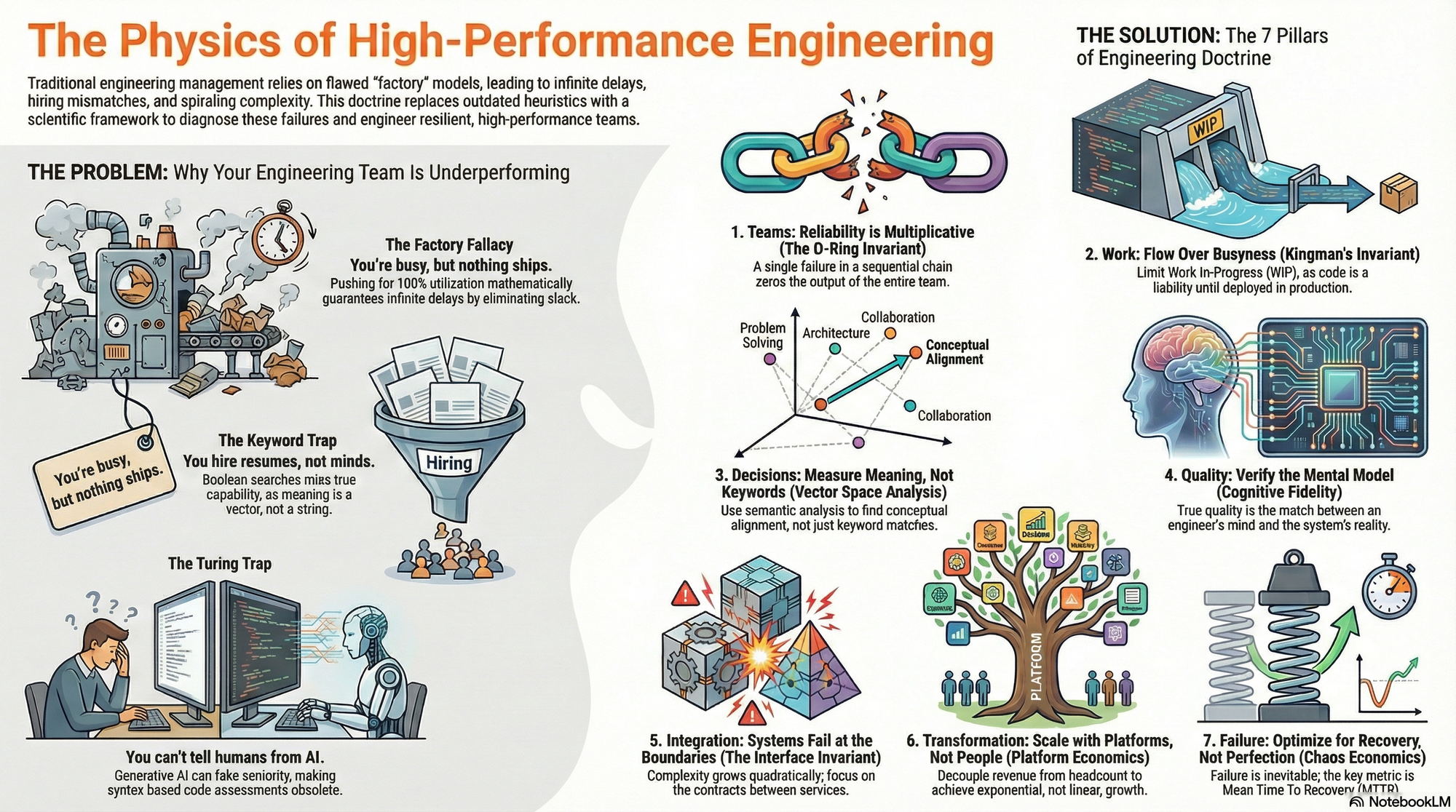

1. The Physics of the Chain: The O-Ring Invariant

The fundamental error in modern engineering management is the application of deterministic manufacturing models to stochastic knowledge work. In a factory, the variance of a task approaches zero. Stamping a widget takes exactly t seconds. If one station fails, the line stops. The failure is visible immediately.

In software engineering, specifically in distributed nearshore environments, the variance is large and heavy-tailed. A task estimated at "one day" may take one hour. It may take one month. The outcome depends on hidden state, legacy debt, and non-deterministic external dependencies. More importantly, a failure at an upstream node does not stop the line immediately. It propagates downstream as "Noise."

The Multiplicative Failure Mode

We posit that engineering teams function under the O-Ring Invariant. This concept is adapted from Michael Kremer’s economic theory. Just as the failure of a single inexpensive O-ring rendered all other perfectly functioning components irrelevant in the Challenger disaster, a failure in a critical upstream engineering node renders downstream brilliance mathematically useless.

In a sequential chain of n workers, the probability of project success (P) is the product of the probabilities of success at each node ($p_i$).

$$P = \prod_{i=1}^{n} p_i$$

If any $p_i$ approaches zero, then P approaches zero. This multiplicative property implies Strict Complementarity. The value of improving one worker's quality depends entirely on the quality of every other worker in the chain. Placing a "10x Engineer" at the end of a chain of junior developers is economic waste. Their multiplier is applied to a base near zero. The product itself is indifferent to the order of its factors; position matters because, in a sequential chain, each worker's effort responds to the quality of what arrives from upstream. Placing that engineer at the start therefore raises the probability ceiling for everyone who follows.
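The multiplicative rule can be sketched in a few lines. The per-node probabilities and the function names below are illustrative assumptions, not measurements from any real team.

```python
from math import prod

def chain_success(node_probs):
    """Probability that every stage in a sequential chain succeeds."""
    return prod(node_probs)

def gain_from_upgrade(node_probs, index, new_p):
    """Change in chain success when one node's probability is replaced."""
    upgraded = list(node_probs)
    upgraded[index] = new_p
    return chain_success(upgraded) - chain_success(node_probs)

# Hypothetical chains: identical except for one weak node.
strong_chain = [0.95, 0.95, 0.95, 0.95]
one_weak_node = [0.95, 0.95, 0.30, 0.95]

print(round(chain_success(strong_chain), 3))   # 0.815
print(round(chain_success(one_weak_node), 3))  # 0.257

# Strict complementarity: the same upgrade of the last node (0.95 -> 0.99)
# is worth less when another node in the chain is weak.
print(gain_from_upgrade(strong_chain, 3, 0.99) >
      gain_from_upgrade(one_weak_node, 3, 0.99))  # True
```

A single node at 0.30 pulls the whole chain from roughly 0.81 to roughly 0.26, and it also shrinks the payoff of improving anyone else.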

This explains the crushing weight of the monolith. A monolith is a dependency graph where n grows without bound. With every $p_i$ below 1, the probability of a successful deployment decays toward zero because the chain of dependencies is too long to sustain fidelity. See Why Is The Monolith Crushing The Team for a deeper analysis of this collapse.
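As a worked illustration, assume every node succeeds independently with the same probability p. This uniform value is an assumption made here for arithmetic convenience, not a claim of the model.

```latex
P = \prod_{i=1}^{n} p_i = p^{n}, \qquad
0.99^{10} \approx 0.904, \qquad
0.99^{100} \approx 0.366, \qquad
\lim_{n \to \infty} p^{n} = 0 \quad \text{for } 0 \le p < 1 .
```

Even at 99 percent reliability per node, a chain of one hundred dependencies succeeds only about a third of the time.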

The Sequential Reactor

The team is a sequential reactor. Value is either added or destroyed at specific gates. What happens at one step shapes the beliefs, risks, and incentives at the next. If the Solutions Architect (t=0) fails, the Backend Engineer (t=1) receives noise. If the Backend Engineer receives noise, their incentive to exert effort drops to zero. Effort applied to noise yields failure.
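The reactor behavior can be sketched as a toy simulation. The effort rule, the threshold, and the shirk factor are all assumptions chosen for illustration; the source does not specify a functional form.

```python
def run_chain(fidelities, effort_threshold=0.5, shirk_factor=0.2):
    """Propagate signal quality through a chain of stages.

    fidelities: per-stage quality multiplier when the stage exerts effort.
    A stage exerts effort only if the quality it receives is at least
    effort_threshold; otherwise it passes on shirk_factor of its input.
    All three parameters are hypothetical.
    """
    quality = 1.0
    trace = []
    for fidelity in fidelities:
        exerts_effort = quality >= effort_threshold
        quality *= fidelity if exerts_effort else shirk_factor
        trace.append((exerts_effort, round(quality, 3)))
    return trace

# A sound architect at t=0 keeps everyone downstream engaged.
print(run_chain([0.9, 0.9, 0.9]))
# [(True, 0.9), (True, 0.81), (True, 0.729)]

# A weak architect at t=0 hands noise to every later stage.
print(run_chain([0.4, 0.9, 0.9]))
# [(True, 0.4), (False, 0.08), (False, 0.016)]
```

In the second run the downstream stages are just as capable as in the first. They withdraw effort only because what reaches them is already degraded.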

This explains why distributed teams stay busy but deliver less. They are not lazy. They are rationally conserving energy in the face of upstream entropy. The "Busyness" is a mask for the lack of "Flow." This phenomenon is detailed extensively in Nearshore Platformed.

2. AI Displacement Kinetics: Who Gets Replaced?

The conversation around AI and work often falls into a strange loop. People ask whether machines will replace developers, analysts, or testers. This views the labor market as a collection of disconnected seats. Actual teams do not function that way. A team is a chain of dependencies.

The structure of those dependencies determines whether AI improves output or breaks the system. We must analyze the Incentive Derivative. This measures the ripple effect of wage inflation upstream caused by the change in the shirking probability (ζ).

The End Node: Structurally Exposed

The end of the pipeline behaves differently from every other point in the sequence. When the last worker shirks, the project succeeds with probability pn-1. Adding AI after them is impossible because there is no "after." This means their incentive to shirk is structural. It is determined purely by the project technology.

Replacing the final worker yields pure, clean savings. The principal avoids paying the expected wage and instead pays the fixed AI cost. There is no "Incentive Distortion" propagated upstream because no one is downstream of the end. In Software Engineering Team Topologies for 2026, roles like QA Validation, Data Aggregation, and Logging are structurally tolerant to automation. See Who Gets Replaced and Why for the mathematical proof.

The Middle Node: Structurally Protected

Replacing a middle position disrupts the informational link that peer monitoring depends on. Worker i observes the effort of the previous worker. Worker i+1 observes the effort of i. If position i is filled by AI, both neighbors experience a massive shift in their incentive landscape.

- Upstream Effect: Workers before i realize the middle of the chain is "safe." The AI will always exert effort. This raises their shirking safety. To keep them working, the principal must drastically raise their wages.

- Downstream Effect: Workers after i lose the human signal they relied on. The chain of peer pressure is broken.

The Middle Worker is the "Reference Base" for the team. They provide the context. If you replace the Integration Architect with an AI, you create a chasm. The upstream devs do not know if their code fits. The downstream devs do not know if the specs are valid. The "Structural Weight" of the middle prevents automation. This validates why seniors fail junior tasks when removed from this context. See Why Are Seniors Failing Junior Tasks.

The Managerial Directive

For US CTOs building nearshore pipelines, the model yields a simple map:

- Automate the End: Use AI for synthetic QA and documentation.

- Support the First: Use AI for scaffolding and initialization.

- Protect the Center: Keep humans in architecture and integration roles.

- Use Hybrid Policies: Probabilistic automation maintains upstream discipline.

This strategy is essential for effective AI Placement in Pipelines.
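The first three rules of the directive can be written as a small lookup by chain position. The function name, the labels, and the example role names are hypothetical, and the hybrid (probabilistic) policy is deliberately left out of this sketch.

```python
def placement_policy(position, chain_length):
    """Map a 0-based position in a sequential chain to a directive label."""
    if chain_length < 1 or not 0 <= position < chain_length:
        raise ValueError("position must lie inside the chain")
    if chain_length > 1 and position == chain_length - 1:
        return "automate"  # end node: synthetic QA, documentation
    if position == 0:
        return "support"   # first node: AI scaffolding and initialization
    return "protect"       # middle nodes: keep humans in place

# Hypothetical four-stage pipeline.
roles = ["scaffolding", "backend", "integration", "qa-validation"]
plan = {role: placement_policy(i, len(roles)) for i, role in enumerate(roles)}
print(plan)
# {'scaffolding': 'support', 'backend': 'protect',
#  'integration': 'protect', 'qa-validation': 'automate'}
```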

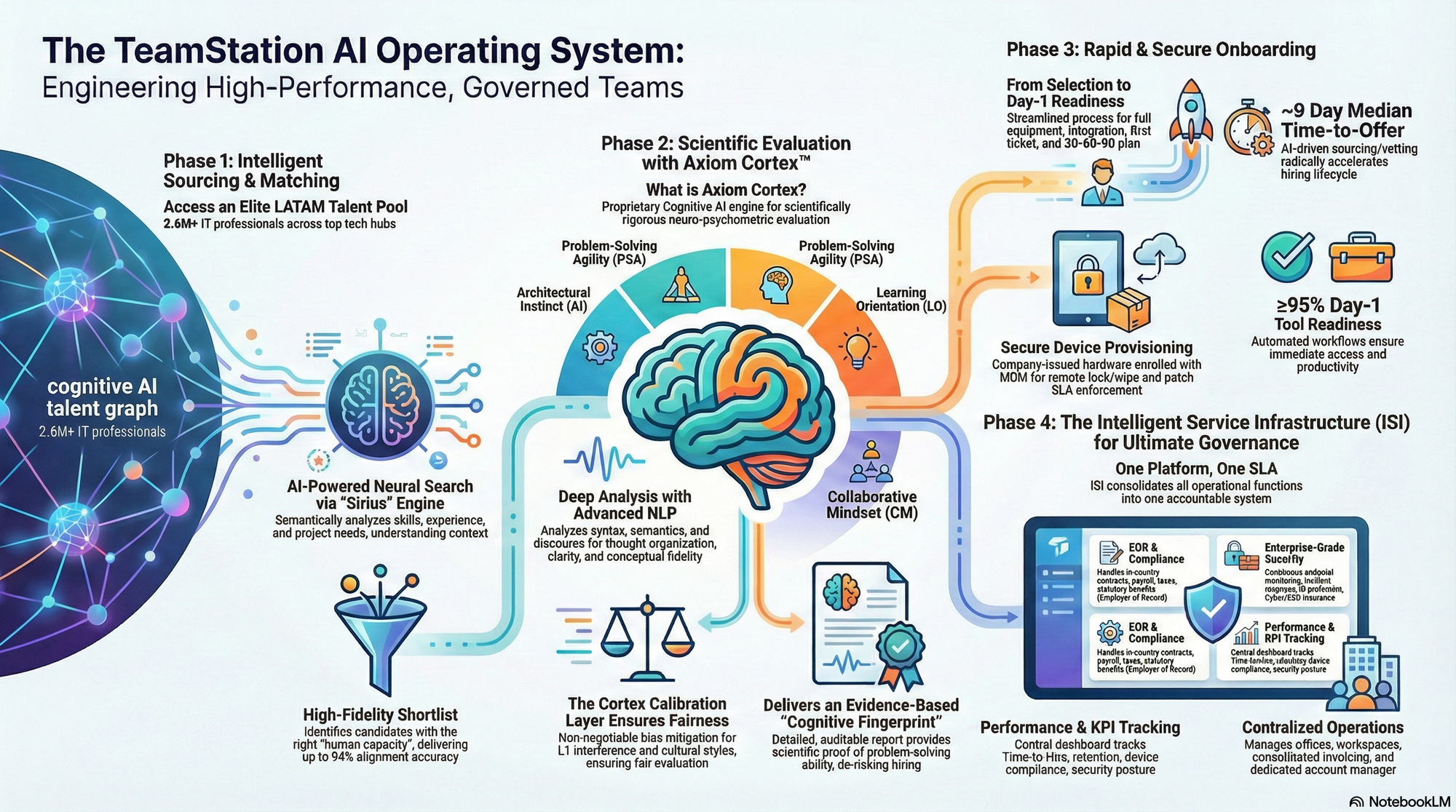

3. The Axiom Cortex: Evaluating the Node

We have established that the team is a probability network. The next step is evaluating the nodes within that network. Traditional hiring relies on "static capacity" markers. Years of experience. Framework lists. Past titles. These lag indicators fail to predict future performance in high-velocity environments.

We utilize the Axiom Cortex™. This is strictly a Neuro-Psychometric Evaluation Engine. It is not a security tool. It is not a firewall. It is a scientific instrument designed to measure the isomorphism between the engineer's mental model and the system state.

Latent Trait Inference Engine (LTIE)

The Axiom Cortex infers critical candidate traits which we quantify and benchmark. This moves beyond binary "qualified/unqualified" judgments to a continuous probability distribution of success. See Axiom Cortex Architecture for the full scientific report.

- Architectural Instinct (AI): Assesses a candidate's ability to think top-down. Can they visualize complex systems before code is written? This is a spatial reasoning trait. It is not a coding trait. Engineers with high Architectural Instinct anticipate failure modes and scalability bottlenecks intuitively. This is critical for system-design assessment.

- Problem-Solving Agility (PSA): Evaluates how effectively a candidate deconstructs problems. It measures the velocity at which an engineer traverses the solution space when variables change. High PSA correlates with rapid root-cause analysis. See How Fast Can They Find Root Cause.

- Learning Orientation (LO): Measures intellectual honesty and the derivative of skill acquisition. In an era where frameworks have a 2-year half-life, LO is the only durable predictor of relevance. It detects the "Authenticity Incidents" where a candidate admits ignorance.

- Collaborative Mindset (CM): Assesses the tendency to work in a team context. A high-capacity individual with low CM functions as a "Black Box" sink. They absorb resources but radiate little value to the network.

Phasic Micro-Chunking

The operational backbone of Axiom Cortex is Phasic Micro-Chunking. We do not feed the entire candidate profile into the analysis at once. That leads to "Context Bleeding" and "Hallucination." We break the evaluation down into atomic units. We process them in strict isolation.

We create an "Answer Evaluation Unit" (AEU) for each specific response. We isolate Question 1 and Answer 1. We strip away the context of the rest of the interview. We force the engine to evaluate only this specific interaction. This prevents the "Halo Effect." A good answer to Question 1 cannot save a bad answer to Question 5. This rigor is detailed in Human Capacity Spectrum Analysis.
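The isolation step can be sketched as follows. This is not the Axiom Cortex implementation; the class, the function names, and the word-count scorer are placeholders invented to show the shape of the technique.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class AnswerEvaluationUnit:
    """One question and its answer, with no other interview context."""
    question: str
    answer: str

def build_units(transcript):
    """Split a list of (question, answer) pairs into isolated units."""
    return [AnswerEvaluationUnit(q, a) for q, a in transcript]

def evaluate_in_isolation(units, score_unit):
    """Score every unit with a function that sees only that one unit."""
    return [score_unit(unit) for unit in units]

def toy_scorer(unit):
    # Placeholder metric: answer length in words, capped at 1.0.
    return min(len(unit.answer.split()) / 10, 1.0)

transcript = [
    ("Q1", "a thorough answer with ten or more words in it total"),
    ("Q5", "unsure"),
]
scores = evaluate_in_isolation(build_units(transcript), toy_scorer)
print(scores)  # [1.0, 0.1]
```

Because the scorer receives a single unit, a strong Question 1 has no channel through which to lift the score for Question 5.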

4. The 2026 Topology Models

Based on the physics of sequential probability and the capabilities of AI, we define the optimal Software Engineering Team Topologies for 2026. These are not theoretical. They are the structures required to survive the entropy of distributed work.

The Centaur Model (Human + AI)

We do not believe in replacing humans. We believe in augmenting them. We adhere to the Centaur Model. This concept is derived from freestyle chess, where mixed human-and-engine teams were shown to outplay unaided humans and standalone engines alike. Human plus AI beats Human. It also beats AI. This is the new operating system for high-performance engineering.

The engineer shifts from "Syntax Generation" to "Agent Orchestration."

- Old Skill: Writing syntax. Manual debugging.

- New Skill: System architecture. Verifying AI output. Problem decomposition.

The question becomes: Will they survive the next framework shift? Only if they have high Problem-Solving Agility. We vet for adaptability. We use the Universal Cognitive Engine to measure how fast a candidate learns a new concept. See Will They Survive The Next Framework Shift.

The Modular Monolith vs. The Distributed Monolith

The fundamental error in modern software architecture is the Fallacy of Decomposition. We assume that breaking a complex system into small parts (microservices) reduces complexity. It does not. It conserves complexity but shifts it from the Local Space to the Global Space.

Most engineering failures happen at the boundary. They happen at the argument list. They happen at the network interface. This leads to Dependency Density. If Node A cannot function without Node B being awake, they are not two services. They are one service broken by a network cable. This is a "Distributed Monolith."

For 2026, we advocate for the Modular Monolith for teams under 50 engineers. You enforce strict boundaries inside the single codebase. You prevent Module A from importing Module B's database models. You gain the benefits of decoupling without paying the tax of the network. See Team Engineering Topologies for topology mathematics.
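One way to enforce such a boundary in a Python codebase is a static import check run in CI. The sketch below uses the standard `ast` module. The module names (`billing`, `orders`) are hypothetical, and relative imports are not resolved.

```python
import ast

def forbidden_imports(source, forbidden_prefixes):
    """Return imported module names in `source` that fall under a forbidden prefix."""
    found = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            names = [alias.name for alias in node.names]
        elif isinstance(node, ast.ImportFrom) and node.module:
            names = [node.module]
        else:
            continue
        for name in names:
            if any(name == p or name.startswith(p + ".") for p in forbidden_prefixes):
                found.append(name)
    return found

# Hypothetical source of a module that must not touch billing's database models.
module_a_source = """
from billing.models import Invoice
from billing.api import create_invoice
import orders.models
"""

violations = forbidden_imports(module_a_source, ["billing.models"])
print(violations)  # ['billing.models']
```

The public `billing.api` import passes while the direct reach into `billing.models` is flagged. That is the in-process equivalent of a service boundary, without the network.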

The Platform Team Topology

We treat the organization as a distributed system. Conway's Law is a constraint. To fix Integration, you often have to fix the Org Chart. We design the organization to match the desired architecture.

We build DevOps & Cloud teams that act as "Platform Teams." They build the internal developer platform (IDP). They do not do the work for the product teams. They build the paved road. This reduces the interaction cost between teams. It turns "Requesting a Server" from a high-friction human interaction into a low-friction machine interaction. This is the profile to look for when you hire DevOps engineering developers.

5. The Economic Reality: Wage Compression and Location

One of the most counterintuitive findings of our sequential model is that the optimal application of AI does not lower wages uniformly. It creates Wage Compression. The internal wage difference between the highest-paid and lowest-paid members of the chain shrinks.

The Paradox of Cheap Talent

Cheap talent is the most expensive talent. In a traditional model, you might try to save money by hiring lower-cost engineers for the middle of the chain. In an AI-augmented chain, this is fatal.

Because the incentives in the middle are naturally eroding due to downstream automation, a worker with a low threshold for effort will almost certainly shirk. The shirking probability (ζ) explodes. The required wage to fix it tends toward infinity. You can have cheap talent, or you can have high reliability. You cannot have both. See Why Cheap Talent Is Expensive.

Geographic Hubs and Cognitive Alignment

Geography is a necessary but insufficient condition. Time zone alignment lowers the Cost of Coordination (c). You must also align the cognitive topology. We utilize specific country hubs to find the right "Nodes" for the graph.

- Brazil: A powerhouse for Java and Data Engineering. The scale of the domestic market creates engineers who understand high concurrency. See Hiring in Brazil and Java Developers in Brazil.

- Mexico: The proximity to the US creates a high density of Senior Architects and .NET experts who understand US business culture. See Hiring in Mexico and .NET Developers in Mexico.

- Colombia: A rapidly growing hub for Frontend and Python development. The cultural affinity for agile collaboration is high. See Hiring in Colombia and Python Developers in Colombia.

- Argentina: Historically strong in creative problem solving and complex backend logic. Excellent for R&D roles. See Hiring in Argentina.

We do not hire "an engineer." We hire a component of a larger machine. We apply Graph Theory to talent acquisition. See Nebula Search AI.

6. Security and Governance in the 2026 Topology

Security is not a separate team. It is a property of the topology. In the 2026 model, we enforce Full Stack Ownership. The developer carries the pager. When you share the pain, you stop pointing fingers.

The Permission Gap

In distributed nearshore engineering, Mean Time To Recovery (MTTR) is often inflated by the Permission Gap. This is a governance failure where the authority to deploy code is separated from the authority to revert code. It is one reason distributed engineering teams stay busy but deliver less. See Why Distributed Teams Stay Busy But Deliver Less.

We solve this by enforcing Symmetric Authority via Terraform infrastructure-as-code. If you have the permission to deploy, you must have the permission to roll back. We use "Break Glass" protocols where engineers can elevate their privileges during an incident. Trust is faster than control.
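The Symmetric Authority rule can be audited with a simple check over exported grants. The principal names and the action labels `deploy` and `rollback` are hypothetical; a real audit would read them from whatever the infrastructure-as-code tooling exports.

```python
def permission_gaps(grants):
    """Return principals that may deploy but may not roll back.

    grants maps a principal name to the set of actions it is allowed.
    """
    return sorted(
        principal
        for principal, actions in grants.items()
        if "deploy" in actions and "rollback" not in actions
    )

# Hypothetical grants for three principals.
grants = {
    "nearshore-backend": {"deploy"},
    "platform-team": {"deploy", "rollback"},
    "qa-readonly": {"read"},
}

print(permission_gaps(grants))  # ['nearshore-backend']
```

An empty result means every principal that can ship a change can also revert it. Any name returned is a Permission Gap waiting for an incident.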

Identity and Access Management

We integrate strictly with enterprise-grade security architectures. We do not rely on manual provisioning. We utilize Single Sign-On (SSO) and Identity Providers (IdP) for all access. We enforce SCIM (System for Cross-domain Identity Management) provisioning so that when an engineer leaves, their access is revoked automatically across every connected system. This is the only reliable way to manage the risk described in What Happens If They Quit Tomorrow.
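For reference, deprovisioning over SCIM 2.0 is commonly expressed as a PATCH that sets the user's `active` attribute to false. The sketch below only builds that request body. The endpoint, the authentication, and whether a given provider deactivates or deletes the user are deployment-specific and are not shown.

```python
import json

# Message schema URN defined by the SCIM 2.0 protocol (RFC 7644).
SCIM_PATCH_SCHEMA = "urn:ietf:params:scim:api:messages:2.0:PatchOp"

def deactivate_user_patch():
    """Build a SCIM 2.0 PatchOp body that marks a user as inactive."""
    return {
        "schemas": [SCIM_PATCH_SCHEMA],
        "Operations": [{"op": "replace", "path": "active", "value": False}],
    }

# The body would be sent as PATCH /Users/{id} to each service's SCIM endpoint.
body = json.dumps(deactivate_user_patch())
print(body)
```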

We mandate the use of Virtual Desktop Infrastructure (VDI) or Mobile Device Management (MDM) for all nearshore nodes. Code never lives on an unmanaged device. This is the standard for Secure Code on a Laptop.

7. Conclusion: The Managerial Directive

The map for US CTOs is clear. The era of the "Warm Body" is over. The era of the "Resume" is over. We are building Software Engineering Team Topologies for 2026 based on the physics of sequential probability.

You must automate the end of the chain where incentives are flat. You must protect the middle of the chain where context matters most. You must evaluate talent using neuro-psychometric inference, not keyword matching. You must treat your team as a graph, not a list.

We hire nodes. We do not hire resumes. Why strong resumes fail is now mathematically obvious. They describe attributes of the node in isolation. They ignore the values of the surrounding graph. The TeamStation AI platform is designed to engineer this graph. It provides the Nearshore IT Co-Pilot to navigate the complexity.

This is not a suggestion. It is a constraint imposed by the physics of the O-Ring Invariant. The cost of ignoring it is obsolescence. The reward for embracing it is a team that scales exponentially rather than linearly. Read Nearshore Platformed to understand the full economic implications of this shift.

References & Further Reading

- Nearshore Platformed: AI and Industry Transformation

- Sequential Effort Incentives

- Axiom Cortex Architecture

- Nearshore Platform Economics

- Who Gets Replaced and Why

- Human Capacity Spectrum Analysis